7 Misconceptions About Google and SEO

The field of SEO has long been known for misinformation and misunderstanding. That is largely because of Google, which works like a black box full of complex algorithms, experiments, and measurements.

Now, however, Google is becoming more transparent through its many public interactions with the SEO community.

This article aims to clear up misconceptions that many people in the SEO community still hold. Here is a list of seven common myths about Google and SEO, along with why we think they are wrong.

1. Google penalizes duplicate content

There is no duplicate content penalty. Google does not penalize websites for having duplicate content.

Google understands that duplicate content is a natural part of the web. It automatically indexes the highest-quality version of a page and matches it to searchers' queries.

The exception is when you deliberately create duplicate content to manipulate rankings. In that case, the page that already ranks well can be replaced in the results by the duplicate version.

To manage duplicate content they created on purpose, SEOs can tell Google which pages they do or do not want indexed. This can be done with indexing rules or through the way the sitemap is built.
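As a rough illustration of the sitemap approach, the sketch below builds a sitemap that lists only the URLs you want indexed and leaves intentional duplicates out. It uses the element names and namespace from the sitemaps.org protocol; the function name `build_sitemap` and the example URLs are hypothetical. Keep in mind that leaving a URL out of the sitemap is only a hint: it does not by itself stop Google from indexing that URL (a `noindex` directive is the usual way to do that).

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, excluded):
    """Return sitemap XML that lists only the URLs you want indexed.

    `urls` is every page on the site; `excluded` holds the intentional
    duplicates you do NOT want in the sitemap (hypothetical inputs).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        if url in excluded:
            continue  # skip duplicates so only preferred pages are listed
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


pages = [
    "https://example.com/shoes",
    "https://example.com/shoes?sort=price",  # duplicate listing of the same products
]
print(build_sitemap(pages, excluded={"https://example.com/shoes?sort=price"}))
```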


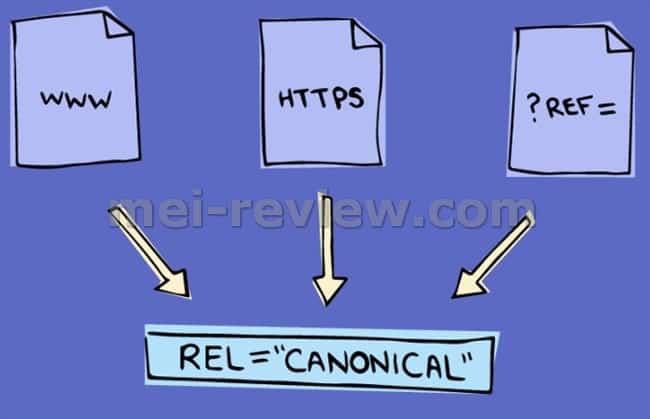

2. Google always respects canonical URLs as the preferred version for indexing

Setting the preferred version of a URL with a canonical tag does not guarantee that Google will choose that page for indexing.

Google treats canonical tags as a signal of the preferred page, but it does not always honor them. The canonical version you chose can be overridden.

Google may pick a different page than the one you marked as canonical if it considers that page a better candidate for search results.

In such cases, ask yourself whether the page you marked as canonical is really the page you want indexed. If it is, review all your signals to check that they point to that version. Above all, keep those signals consistent so that they all point Google to the page you tagged as canonical.
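One small part of that consistency check can be automated: confirming that a page's HTML carries exactly one `<link rel="canonical">` tag and that it points to the URL you actually prefer. The sketch below uses Python's standard `html.parser`; the class and function names are hypothetical, and it assumes you already have the page's HTML as a string. It only inspects the tag itself, not other signals such as internal links, redirects, or the sitemap.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # rel can hold several space-separated values, and may be missing.
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonicals.append(attrs.get("href"))


def canonical_issues(html, preferred_url):
    """Return a list of problems with the page's canonical tag (empty if none)."""
    finder = CanonicalFinder()
    finder.feed(html)
    found = finder.canonicals
    if not found:
        return ["no canonical tag"]
    issues = []
    if len(found) > 1:
        issues.append("multiple canonical tags")
    # Flag any canonical that points somewhere other than the preferred URL.
    issues += [f"canonical points to {href}" for href in found if href != preferred_url]
    return issues


page = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
print(canonical_issues(page, "https://example.com/shoes"))  # → []
```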

3. Algorithm updates penalize websites

We often assume that when updates such as Google Panda, Phantom, or Fred roll out, they penalize websites.

There is no such thing as an algorithmic penalty; it is really just a recalculation. Think of the algorithm as a black box with a built-in recipe: when you put something different in, the output changes. The evidence is simply that your rankings change. Whether they rise or fall depends on whether the formula judges what you put in as true, reasonable, or false.
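To make the "recalculation, not punishment" idea concrete, here is a deliberately tiny toy model: each page gets a weighted sum of a few signal scores, and pages are sorted by that sum. The signal names, values, and weights are all invented for illustration; this is not how Google actually scores pages. The point is that when the weights change (an "update"), the order flips even though no penalty term exists anywhere in the formula.

```python
def rank(pages, weights):
    """Order pages by a weighted sum of their signal scores (toy model only)."""
    def score(signals):
        return sum(weights[name] * value for name, value in signals.items())
    return sorted(pages, key=lambda page: score(pages[page]), reverse=True)


# Hypothetical pages with made-up signal scores between 0 and 1.
pages = {
    "site-a": {"relevance": 0.9, "freshness": 0.2},
    "site-b": {"relevance": 0.6, "freshness": 0.9},
}

before = rank(pages, {"relevance": 1.0, "freshness": 0.2})
after = rank(pages, {"relevance": 1.0, "freshness": 0.6})  # the "update": freshness counts more
print(before, after)  # → ['site-a', 'site-b'] ['site-b', 'site-a']
```

Site A drops to second place without being punished: the same inputs were simply re-weighed by a changed formula.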

And when the black box itself is changed to work better, what comes out of it looks a little different. That does not mean you have been penalized!

4. Google has three top ranking factors

Some sources claimed that links, content, and RankBrain are the top three ranking factors. However, Google's John Mueller said this information has become outdated over time.

Focusing on individual ranking signals is not helpful, because both the algorithm and user behavior are becoming more and more complex. Instead, SEOs should focus on optimizing websites to improve the user experience.

This work has to be done with users in mind and requires keeping up with Google's latest changes. That is also why specialized SEO companies can help you reach the top faster: they usually receive the latest information about SEO and Google.

5. Google's Sandbox applies filters when indexing new web pages

Another misconception comes from the way Google treats new websites during indexing. Many in the SEO community believed that Google applied a separate filter to these pages to block spam pages immediately after launch.

Google's Mueller said no such filter is applied. However, there may be a set of algorithms used to index and rank new websites.

6. Use the disavow file to maintain the site's link profile

An important part of an SEO's job is cleaning up a website's backlink profile by disavowing low-quality or spammy links.

Over the years, Google's algorithms have become better at recognizing low-quality backlinks and knowing when to ignore them.

For now, you should only use the disavow file when a website has received a manual action, to disavow the offending links.
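For that manual-action case, the disavow file itself is plain text: one URL per line, a `domain:` prefix to disavow every link from a whole domain, and lines starting with `#` treated as comments. The sketch below assembles such a file from lists you would compile yourself from the manual action report; the function name `build_disavow` and the example domains are hypothetical. The resulting file is uploaded through Google Search Console's disavow links tool.

```python
def build_disavow(domains, urls, note="Links flagged in manual action"):
    """Return disavow-file text: a comment, domain-level entries, then single URLs."""
    lines = [f"# {note}"]                                 # comment line
    lines += [f"domain:{d}" for d in sorted(set(domains))]  # whole-domain entries
    lines += sorted(set(urls))                            # individual pages
    return "\n".join(lines) + "\n"


text = build_disavow(
    domains=["spammy-links.example"],
    urls=["https://low-quality.example/page.html"],
)
print(text)
```

Deduplicating and sorting the entries is just a convenience so repeated reports don't produce repeated lines.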

7. Backlink value comes from a high-quality domain

Successful link building should be judged first by the quality and relevance of the backlinks pointing to the landing page. Whether those backlinks come from authoritative domains matters only after that.

If an article is of poor quality and unrelated to your website, Google will ignore the backlink from it because the context is inappropriate.